Local LLM with OpenCode

How to configure OpenCode to use any OpenAI-compatible API endpoint, such as a local vLLM server.

Read article →

A running stream of posts on software engineering and technical practices.

Showing posts tagged “vLLM”.

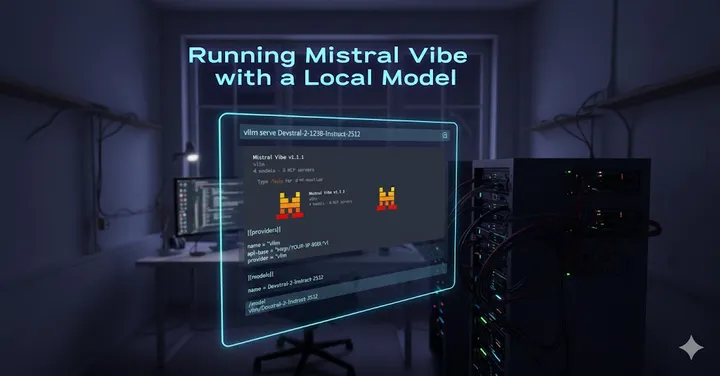

A practical guide for configuring Mistral Vibe to use local models such as Devstral-2-123B via vLLM.

Read article →

A complete step-by-step guide for installing vLLM, configuring systemd, setting up virtual environments, and troubleshooting GPU-backed inference servers.

Read article →